Migrating an application from AWS+docker-machine to ECS (and back)

Introduction

I'm following my own old advice to write out the thoughts when they are too vague to be directly converted into actions.

Well. The first part of the situation I found myself in is that when you don't care about your infrastructure, you'll inevitably end up running outdated solutions. That doesn't mean "bad", but... when you try to integrate them with newly developed features... some strange creatures may appear.

Illustration from the classical art

Back in the day, more precisely in May 2020, using an AWS host provisioned by docker-machine looked like a good (a.k.a. simple and fast) solution for my (handicapped) pet project. Recently I ran into some errors while deploying the next version of this still pretty humble project, so I SSHed into the host and found out that it runs Ubuntu 16.04 and Docker 19.x.x, which is, to put it mildly, outdated...

Of course I could downgrade some of the services... but yuy... it's not our way, right? We, as humanity, and even the tiniest human within it, should move forward, right? So.

docker-machine upgrade my-handicapped-pet had no effect, because apparently that is already the latest Docker for this distribution. So, first I have to upgrade the host OS. Then, another issue is that my AWS EC2 instance is in Singapore (because I was staying in Southeast Asia at the time), while my (potential) clients and I are in the Americas. A good chance to migrate as well. Yeah?

I tried to search for how to upgrade the host OS for docker-machine and found out that the repo is no longer supported, and there isn't much information at all. So, one more item for the snowball: now I have to find a more adequate orchestration tool. Well, good start: having run into one small issue, rebuild the whole infrastructure. Here, my dear (imaginary) reader, I'm writing before taking any action; I'm still investigating, so there will be more links than direct steps. I'm writing all this because it is better than procrastination, but easier and less, hm... energy-consuming... than doing actual things. Hopefully I'll finally arrive at an action plan that I can later execute and verify.

Comparing different solutions for provisioning, ECS (Elastic Container Service) appealed to me. I had thought even before that being nailed to a single VPS isn't very good. My app may benefit from being distributed and potentially scaled, for such things as the proxy and crawlers. But... let's leave that for the future. For now I just need to fix deployments. On the one hand, ECS will keep me in AWS while lifting the need to provision the host and related stuff such as disk and network; on the other hand, it will lock me into Amazon even more (and probably make me pay for a few more of Jeff Bezos's cups of coffee a month... yeah Jeff, you are a badass...), but let's accept the risk for now.

So, enough ranting, let's get to the concrete steps.

Prerequisites

What I already have is an app that runs under docker-compose, with commands like:

docker compose -f docker-compose.yml -f docker-compose.dev.yml build --build-arg DATABASE_URL="mongodb://172.17.0.1:27017/sadist"

docker compose -f docker-compose.yml -f docker-compose.dev.yml up

And to stop it:

docker compose -f docker-compose.yml -f docker-compose.dev.yml down --remove-orphans

(files and args are not important, that is how I parameterize my environment).

I'm following these instructions, which seemed pretty similar to my case.

Registry

What I don't have is a Docker registry. I build my images and run them right away (separately for staging and prod), so they only persist on the target host. Let's get a registry. So, the first thing I have to do is prefix the image names with my (fancy) user name.

For instance:

version: '3'
services:
  flask:
    image: myhandicappedpet/webapp-flask
    build:
      context: .
      dockerfile: Dockerfile-flask
    environment:
      - DATABASE_URL
  ...

Now, we can push the images after we have built them:

docker login

docker compose -f docker-compose.yml -f docker-compose.prod.yml push

AWS set up

Set up ECS as per instructions.

Create a user and its access key at IAM console.

Export the AWS_ACCESS_KEY_ID and AWS_SECRET_ACCESS_KEY environment variables and run:

docker context create ecs my-handicapped-pet

Select "AWS environment variables".

And to check results:

docker context ls

Make this context current:

docker context use my-handicapped-pet

Then, theoretically, the following command will bring up my services:

docker compose -f docker-compose.yml -f docker-compose.prod.yml up

Terraform

Actually, I never got the command above to work.

There are some peculiarities in my docker-compose configuration that make it not very portable, such as external volumes and networks. Besides that, there is a lot of configuration outside the Docker containers, such as host network, disks and memory. With a VPS it wasn't too much (though still not very good), but here in serverless land this burden starts to be overwhelming. So it is a good chance to describe all the infrastructure as code, and get rid of some parts which I had been managing manually, and which had already gotten me into some problems.

Terraform looks like an industry standard. So let's give it a chance.

Before starting, let's delete all manually added configuration, except for the user, so that the Terraform configuration is applied to a clean slate.

Here are some guides (1) (2) (Fargate) (3) (4) (5) (6) (EC2) which I use as a reference beside of the official documentation.

EC2 or Fargate?

ECS can run on top of EC2 or Fargate. There are a lot of resources comparing them; to put it briefly, Fargate is better when one has to flexibly reconfigure instances. As the guys from one devops Discord server put it, Fargate requires more initial investment in configuration but pays it back with less technical overhead going forward.

I chose EC2 for now since I have only one server, and I have already spent a lot of time on this annoying stuff. Though, of course, I may regret this decision later.

Installation

Terraform is shipped as a single executable, and its installation is pretty straightforward. I followed the instructions, though on my machine HashiCorp's APT repo didn't work out, so I simply downloaded the executable.

Common configuration

In Terraform, a project or module is simply a directory containing a number of *.tf files, which are all born equal and are separated only for readability.

I'll split my main module into input arguments, output attributes (if any), internal configuration, and resources.

variables.tf - input variables.

main.tf - resources.

My variables.tf looks like below.

variable "region" {

type = string

description = "The region which to create resources in"

default = "us-west-2"

}

variable "container_port" {

type = number

description = "Container port"

default = 400

}

variable "env" {

type = string

description = "Environment to provision: prod|staging"

default = "prod"

}

variable "image_list" {

type = list(string)

description = "List of images that have to be rebuilt"

default = []

}

In main.tf, first include hashicorp/aws resource provider. Version is the latest available version.

terraform {

required_providers {

aws = {

source = "hashicorp/aws"

version = "~> 5.52"

}

}

}

provider "aws" {

region = var.region

}

Than, run

terraform initNetwork

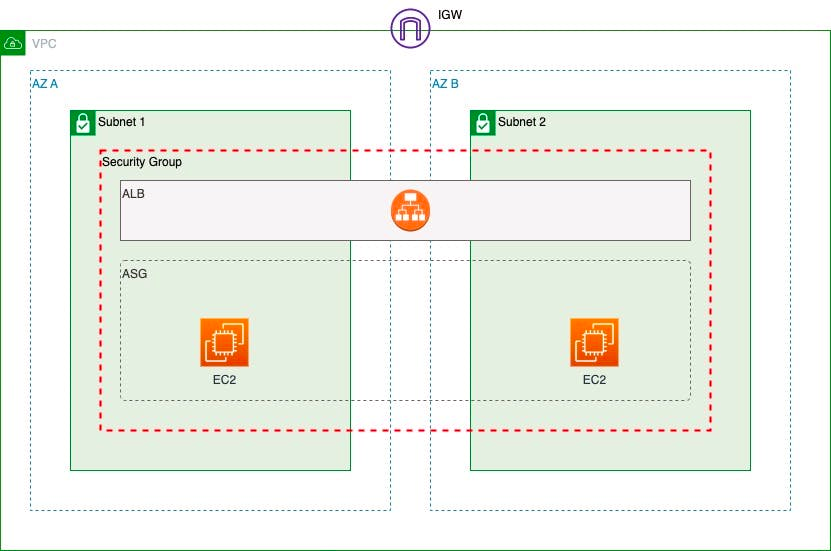

For networking Amazon use VPC (Virtual Private Cloud) which is a pool of network addresses which can be resolved to the endpoints in the multiple availability zones. It does not use docker network interfaces (though can be achieved by using netwrokType="bridge").

It is possible to use VPC and subnets created by default, by I'll create my own in order to further use different VPC for different environments.

Picture that persists in almost any article on ECS

resource "aws_vpc" "my-handicapped-vpc" {

cidr_block = "172.0.0.0/16"

enable_dns_hostnames = true

tags = {

name = "my-handicapped-vpc"

}

}

resource "aws_subnet" "my-handicapped-subnet-1" {

vpc_id = aws_vpc.my-handicapped-vpc.id

cidr_block = cidrsubnet(aws_vpc.my-handicapped-vpc.cidr_block, 8, 1)

map_public_ip_on_launch = true

availability_zone = "${var.region}a"

}

resource "aws_subnet" "my-handicapped-subnet-2" {

vpc_id = aws_vpc.my-handicapped-vpc.id

cidr_block = cidrsubnet(aws_vpc.my-handicapped-vpc.cidr_block, 8, 2)

map_public_ip_on_launch = true

availability_zone = "${var.region}b"

}

resource "aws_security_group" "my-handicapped-load-balancer" {

name = "my-handicapped-load-balancer"

description = "Control access to the application load balancer"

vpc_id = "${aws_vpc.my-handicapped-vpc.id}"

ingress {

protocol = "tcp"

from_port = 80

to_port = 80

self = false

cidr_blocks = ["0.0.0.0/0"]

ipv6_cidr_blocks = ["::/0"]

}

ingress {

protocol = "tcp"

from_port = 443

to_port = 443

cidr_blocks = ["0.0.0.0/0"]

ipv6_cidr_blocks = ["::/0"]

}

egress {

protocol = "-1"

from_port = 0

to_port = 0

cidr_blocks = ["0.0.0.0/0"]

ipv6_cidr_blocks = ["::/0"]

}

}
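To make the cidrsubnet() calls above concrete: with a /16 VPC block and 8 new bits, each subnet is a /24 whose third octet is the network number. The sketch below is a hypothetical shell helper (not part of Terraform or of this project) that mimics the function for IPv4 only; it assumes a shell with 64-bit arithmetic.

```shell
#!/bin/sh
# Hypothetical helper mimicking Terraform's cidrsubnet(prefix, newbits, netnum)
# for IPv4 only. The body runs in a subshell so no variables leak out.
cidrsubnet() (
  prefix=$1; newbits=$2; netnum=$3
  addr=${prefix%/*}                # e.g. "172.0.0.0"
  len=${prefix#*/}                 # e.g. "16"
  newlen=$(( $len + $newbits ))    # 16 + 8 = 24
  IFS=.
  set -- $addr                     # split the dotted quad into $1..$4
  base=$(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
  net=$(( $base | ($netnum << (32 - $newlen)) ))
  printf '%d.%d.%d.%d/%d\n' \
    $(( ($net >> 24) & 255 )) $(( ($net >> 16) & 255 )) \
    $(( ($net >> 8) & 255 ))  $(( $net & 255 )) "$newlen"
)

cidrsubnet 172.0.0.0/16 8 1   # what subnet-1 gets
cidrsubnet 172.0.0.0/16 8 2   # what subnet-2 gets
```

This prints 172.0.1.0/24 and 172.0.2.0/24. As a side note, 172.0.0.0/16 lies outside the RFC 1918 private ranges (the private 172.x block is 172.16.0.0/12); as far as I know AWS accepts such a block, but a private range is probably the safer choice.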

EC2 instance

An instance can be declared directly, or through an autoscaling group. Though I am planning to have only one instance for the time being, I will create an autoscaling group for the convenience of further scaling.

In my case, stateless load balancing between instances won't work (at least for some services; e.g. I have a proxy which opens a headless browser on behalf of a user, so all requests from the same user have to go to the same instance. TODO figure out how to implement it).

A launch template is a blueprint for creating EC2 instances. (Earlier there were launch configurations, but launch templates are a newer and more advanced feature.)

resource "aws_launch_template" "my-handicapped-launch-template" {

name_prefix = "my-handicapped-"

image_id = "ami-0eb9d67c52f5c80e5"

instance_type = "t3.small"

vpc_security_group_ids = [aws_security_group.my-handicapped-load-balancer.id]

user_data = base64encode("#!/bin/bash\necho ECS_CLUSTER=my-handicapped-cluster >> /etc/ecs/ecs.config")

block_device_mappings {

device_name = "/dev/sda1"

ebs {

volume_type = "gp2"

volume_size = 64

}

}

network_interfaces {

associate_public_ip_address = true

}

}

The image ID refers to one of the Amazon-provided VM images; I took the latest Amazon Linux.

The instance type defines the size of the instance (memory and CPU units). Its architecture must be compatible with the image.

The user_data parameter is a stunt to make the instance join the specified cluster, so that containers are created there. Otherwise it joins the default cluster.
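For reference, base64encode() merely wraps the two-line shell script so that it can travel through the EC2 API; the instance sees the decoded script at boot. A minimal round-trip sketch, assuming a base64 utility that accepts -d for decoding (GNU coreutils does):

```shell
#!/bin/sh
# The same script that the launch template passes as user_data.
user_data='#!/bin/bash
echo ECS_CLUSTER=my-handicapped-cluster >> /etc/ecs/ecs.config'

# Encode it (the equivalent of base64encode()), stripping line wraps.
encoded=$(printf '%s' "$user_data" | base64 | tr -d '\n')

# Decode it back to check that nothing was lost on the way.
decoded=$(printf '%s' "$user_data" | base64 | base64 -d)

echo "$encoded"
echo "$decoded"
```

The second echo shows the original script again, which is what ends up appending the ECS_CLUSTER line to /etc/ecs/ecs.config.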

resource "aws_autoscaling_group" "my-handicapped-autoscaling-group" {

name_prefix = "my-handicapped-"

vpc_zone_identifier = [aws_subnet.my-handicapped-subnet-1.id, aws_subnet.my-handicapped-subnet-2.id]

max_size = 1

min_size = 1

health_check_grace_period = 300

health_check_type = "EC2"

launch_template {

id = aws_launch_template.my-handicapped-launch-template.id

version = "$Latest"

}

tag {

key = "Name"

propagate_at_launch = true

value = "my-handicapped-pet"

}

tag {

key = "AmazonECSManaged"

propagate_at_launch = true

value = ""

}

}

The autoscaling group defines the number of instances created.

Storage

I can still use Docker's built-in volumes, but they will be mounted on the VM's disk, which is not good when scaling or upgrading it. The other options are EFS (Elastic File System) and EBS (Elastic Block Store).

Volumes that are attached to tasks that are managed by a service aren't preserved and are always deleted upon task termination.

EBS is what is used as the disk attached to an EC2 instance by default. An EBS volume also cannot be shared between EC2 instances. So, I can have one EBS volume attached to the instance, and go with Docker volumes within it. In the launch template above, the block_device_mappings section provides the size of the root device storage. /dev/sda1 is supposedly the root device name on Linux images.

Images

Before deploying, I have to build the Docker images and push them to AWS's own registry. To this end, let's create a module which builds and deploys an image.

A module is a folder containing a number of *.tf files.

TODO describe it

Task definition

A task definition is a JSON document describing Docker services, similar to docker-compose.yml.

My services need access to secrets. The common place to store them in Amazon is Secrets Manager, and they can be passed as environment variables in the task definition.

So, I create a secret in Secrets Manager named, for example, my-handicapped-pet/prod, and access it in my config:

data "aws_secretsmanager_secret" "my-handicapped-pet" {

name = "my-handicapped-pet/${var.env}"

}

To have access to the Secrets Manager inside the task, I'll have to write another boilerplate of shit:

data "aws_iam_policy_document" "task-execution-iam-role-document" {

version = "2012-10-17"

statement {

sid = ""

effect = "Allow"

principals {

type = "Service"

identifiers = ["ecs-tasks.amazonaws.com"]

}

actions = ["sts:AssumeRole"]

}

}

resource "aws_iam_role" "task-execution-iam-role" {

name = "task-execution-iam-role"

assume_role_policy = data.aws_iam_policy_document.task-execution-iam-role-document.json

}

resource "aws_iam_role_policy_attachment" "task-execution-iam-role-policy-attachment" {

policy_arn = "arn:aws:iam::aws:policy/service-role/AmazonECSTaskExecutionRolePolicy"

role = aws_iam_role.task-execution-iam-role.name

}

Now, the task definition itself.

resource "aws_ecs_task_definition" "my-handicapped-task-definition" {

family = "my-handicapped-pet"

execution_role_arn = aws_iam_role.task-execution-iam-role.arn

memory = 4096

network_mode = "awsvpc"

container_definitions = jsonencode([

{

name = "flask"

image = "myhandicappedpet/webapp-flask:latest"

essential = true

portMappings = []

containerPort = var.container_port

secrets = [

{

name = "DATABASE_URL"

valueFrom = data.aws_secretsmanager_secret.my-handicapped-pet.arn

}

]

}, {

name = "nginx"

image = "myhandicappedpet/webapp-nginx:latest"

essential = true

portMappings = [

{

containerPort = 80

hostPort = 80

},

{

containerPort = 443

hostPort = 443

}

]

containerPort = var.container_port

mountPoints = [

{

sourceVolume = "certbot_www"

containerPath = "/var/www/certbot/"

},

{

sourceVolume = "ssl_certs"

containerPath = "/etc/letsencrypt/"

}

]

dependsOn = [

{

containerName = "flask"

condition = "START"

}, {

containerName = "proxy"

condition = "START"

}

]

}, {

name = "certbot"

image = "myhandicappedpet/certbot:latest"

essential = true

portMappings = []

containerPort = var.container_port

mountPoints = [

{

sourceVolume = "certbot_www"

containerPath = "/var/www/certbot/"

},

{

sourceVolume = "ssl_certs"

containerPath = "/etc/letsencrypt/"

}

]

}, {

name = "proxy"

image = "myhandicappedpet/webapp-proxy:latest"

essential = true

portMappings = []

containerPort = var.container_port

secrets = [

{

name = "ENV"

valueFrom = data.aws_secretsmanager_secret.my-handicapped-pet.arn

}

]

}, {

name = "chrome1"

image = "alpeware/chrome-headless-trunk"

essential = true

portMappings = []

containerPort = var.container_port

mountPoints = [

{

sourceVolume = "chromedata"

containerPath = "/data"

}

]

}

], )

volume {

name = "certbot_www"

docker_volume_configuration {

scope = "shared"

autoprovision = true

}

}

volume {

name = "ssl_certs"

docker_volume_configuration {

scope = "shared"

autoprovision = true

}

}

volume {

name = "chromedata"

docker_volume_configuration {

scope = "task"

}

}

}

As you can see, it's pretty similar to the compose definitions, just more verbose.

Service

...

Back to the USSR docker-compose

With the configuration above I could enjoy the error message "No Container Instances were found in your cluster". Googling revealed that this error is raised for any kind of problem, from connectivity to their cumbersome permission system to custom config files.

So, after some struggling, I decided not to build a more complex solution than I need, and stepped back to Docker (with compose) hosted on a single machine.

However, I decided to stay with Terraform, in case I once again need to migrate to another host image or region.

I don't want to explain in detail the changes needed to make a Terraform configuration work with docker compose. I'm just writing down the final configuration here.

So, the main module.

main.tf

terraform {

required_providers {

aws = {

source = "hashicorp/aws"

version = "~> 5.54"

}

}

backend "s3" {

bucket = "my-handicapped-bucket"

key = "terraform/state"

region = "us-west-2"

}

}

provider "aws" {

region = var.region

}

data "aws_vpc" "default" {

default = true

}

data "aws_subnets" "default" {

filter {

name = "vpc-id"

values = [data.aws_vpc.default.id]

}

}

data "external" "get-public-ip" {

program = ["curl", "http://api.ipify.org?format=json"]

}

resource "aws_security_group" "my-handicapped-security-group" {

name = "my-handicapped-security-group"

description = "Allow all HTTP/HTTPS traffic"

vpc_id = "${data.aws_vpc.default.id}"

ingress {

protocol = "tcp"

from_port = 80

to_port = 80

self = false

cidr_blocks = ["0.0.0.0/0"]

ipv6_cidr_blocks = ["::/0"]

}

ingress {

protocol = "tcp"

from_port = 443

to_port = 443

cidr_blocks = ["0.0.0.0/0"]

ipv6_cidr_blocks = ["::/0"]

}

# Staging

ingress {

protocol = "tcp"

from_port = 8080

to_port = 8080

self = false

cidr_blocks = ["0.0.0.0/0"]

ipv6_cidr_blocks = ["::/0"]

}

ingress {

protocol = "tcp"

from_port = 8043

to_port = 8043

cidr_blocks = ["0.0.0.0/0"]

ipv6_cidr_blocks = ["::/0"]

}

ingress {

protocol = "icmp"

from_port = 0

to_port = 0

cidr_blocks = ["0.0.0.0/0"]

ipv6_cidr_blocks = ["::/0"]

}

# Current IP for executing SSH command via CI/CD

ingress {

protocol = "tcp"

from_port = 22

to_port = 22

cidr_blocks = ["${data.external.get-public-ip.result.ip}/32"]

}

# Similar blocks should be created for all developer machines

ingress {

protocol = "tcp"

from_port = 22

to_port = 22

cidr_blocks = ["186.4.53.135/32"]

}

# PyPI

ingress {

protocol = "tcp"

from_port = 3141

to_port = 3141

cidr_blocks = ["0.0.0.0/0"]

ipv6_cidr_blocks = ["::/0"]

}

egress {

protocol = "-1"

from_port = 0

to_port = 0

cidr_blocks = ["0.0.0.0/0"]

ipv6_cidr_blocks = ["::/0"]

}

}

module "aws-iam-role-for-ecr" {

source = "./modules/aws-iam-role"

action_list = ["ecr:*"]

name = "ec2-role-my-handicapped-pet"

}

resource "aws_iam_instance_profile" "my-handicapped-instance-profile" {

name = "my-handicapped-instance-profile"

role = module.aws-iam-role-for-ecr.name

}

resource "aws_key_pair" "instance-auth" {

key_name = "aws_my_handicapped_pet"

public_key = file("${var.cert_path}/aws_my_handicapped_pet.pub")

}

resource "aws_instance" "my-handicapped-instance" {

ami = "ami-0b20a6f09484773af"

instance_type = "t3.small"

key_name = aws_key_pair.instance-auth.key_name

root_block_device {

volume_size = 64

}

user_data = <<-EOF

#!/bin/bash

set -ex

sudo yum update -y

sudo yum install -y docker

sudo service docker start

sudo usermod -a -G docker ec2-user

EOF

vpc_security_group_ids = [aws_security_group.my-handicapped-security-group.id]

iam_instance_profile = aws_iam_instance_profile.my-handicapped-instance-profile.name

monitoring = true

disable_api_termination = false

ebs_optimized = true

tags = {

Name = "my-handicapped-instance"

}

}

# TODO: add a common configuration service and connect it to the Secrets Manager.

# (currently everything is in GitHub secrets > ENV variables)

#data "aws_secretsmanager_secret" "my-handicapped-pet" {

# name = "my-handicapped-pet/${var.env}"

#}

# check out all needed sources

module "git-checkout" {

for_each = toset(flatten([for k in var.image_list : local.config[k]["repo"]]))

source = "./modules/git-checkout"

repo = "${each.value}"

}

# build docker images

# for all the requested services

module "docker-image" {

depends_on = [module.git-checkout]

for_each = zipmap(var.image_list, [for k in var.image_list : local.config[k]["dockerfile"]])

source = "./modules/docker-image"

name = each.key

dockerfile = each.value

}

# execute remotely docker-compose to bring all services up

resource "null_resource" "docker-compose-up" {

depends_on = [module.docker-image]

triggers = {

always = "${timestamp()}"

}

provisioner "remote-exec" {

connection {

host = aws_instance.my-handicapped-instance.public_dns

user = "ec2-user"

private_key = file("${var.cert_path}/aws_my_handicapped_pet")

}

script = "./bin/wait-instance.sh"

}

provisioner "local-exec" {

command = "./bin/deploy.sh ${aws_instance.my-handicapped-instance.public_dns}"

environment = {

ENV = var.env

DOCKER_HOST = "ssh://ec2-user@${aws_instance.my-handicapped-instance.public_dns}"

}

}

}

# elastic IP (after it's created, manually update the Route 53 zone records;

# it seems a little bit complicated to write configuration for this)

resource "aws_eip" "my-handicapped-elastic-ip" {

instance = aws_instance.my-handicapped-instance.id

domain = "vpc"

}

Variables passed to the main module, variables.tf.

variable "region" {

type = string

description = "The region which to create resources in"

default = "us-west-2"

}

variable "cert_path" {

type = string

description = <<-EOF

Path to the SSH certificates for the machine provisioning.

This path must contain `ssh-keygen`-generated key pair:

aws_my_handicapped_pet

aws_my_handicapped_pet.pub

EOF

default = "."

}

variable "env" {

type = string

description = "Environment to provision: prod|staging"

default = "prod"

}

variable "image_list" {

type = list(string)

description = "List of images that have to be rebuilt"

default = []

}
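The image_list variable is meant to be set per run, so that only the requested images are rebuilt. A list(string) variable can be passed on the command line as an HCL list literal; the helper below is a hypothetical convenience (not part of the project) that builds that literal from plain arguments.

```shell
#!/bin/sh
# Hypothetical helper: turn image names into a Terraform -var value,
# e.g. image_list=["webapp-flask","webapp-nginx"].
image_list_var() {
  list=""
  for name in "$@"; do
    # Append a comma only when the list already has an element.
    list="${list:+$list,}\"$name\""
  done
  printf 'image_list=[%s]\n' "$list"
}

image_list_var webapp-flask webapp-nginx
# Possible usage (not run here):
#   terraform apply -var "$(image_list_var webapp-flask webapp-nginx)"
```

With no arguments the helper yields image_list=[], which matches the variable's default of rebuilding nothing.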

local-config.tf

# describe how to build an image

# image name -> {"repo": [repo1, repo2], "dockerfile": "repo1/Dockerfile"}...

locals {

config = {

webapp-flask = {

repo = ["ilyaukin/sadist-be", "ilyaukin/sadist-fe"]

dockerfile = "./sadist-be/Dockerfile-flask"

}

webapp-nginx = {

repo = ["ilyaukin/sadist-be"]

dockerfile = "./sadist-be/Dockerfile-nginx"

}

certbot = {

repo = ["ilyaukin/sadist-be"]

dockerfile = "./sadist-be/Dockerfile-certbot"

}

webapp-proxy = {

repo = ["ilyaukin/sadist-proxy"]

dockerfile = "./sadist-proxy/Dockerfile-proxy"

}

blog-app = {

repo = ["ilyaukin/sadist-blog"]

dockerfile = "./sadist-blog/Dockerfile-blog"

}

blog-admin-app = {

repo = ["ilyaukin/sadist-blog"]

dockerfile = "./sadist-blog/Dockerfile-blog-admin"

}

}

}
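To see what the two for_each expressions in main.tf compute from this map: the first one flattens the repo lists of the requested images and removes duplicates (so each repository is checked out once), and the second one pairs each image name with its Dockerfile. Below is a rough shell imitation on a two-image excerpt of the config; the flat table format and the function names are made up for illustration.

```shell
#!/bin/sh
# Excerpt of local.config as "image dockerfile repo..." lines
# (a made-up flat format, only for this sketch).
config='webapp-flask ./sadist-be/Dockerfile-flask ilyaukin/sadist-be ilyaukin/sadist-fe
webapp-nginx ./sadist-be/Dockerfile-nginx ilyaukin/sadist-be'

# Like toset(flatten([for k in image_list : config[k].repo])).
repos_for() {
  for image in "$@"; do
    printf '%s\n' "$config" |
      awk -v image="$image" '$1 == image { for (i = 3; i <= NF; i++) print $i }'
  done | sort -u
}

# Like zipmap(image_list, [for k in image_list : config[k].dockerfile]).
dockerfiles_for() {
  for image in "$@"; do
    printf '%s\n' "$config" |
      awk -v image="$image" '$1 == image { print $1 "=" $2 }'
  done
}

repos_for webapp-flask webapp-nginx
dockerfiles_for webapp-flask webapp-nginx
```

Although both images list ilyaukin/sadist-be, repos_for prints it only once, which is why the git-checkout module is instantiated per repository rather than per image.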

Module to build a Docker image and upload it to the registry. It is located in modules/docker-image and consists of the following files.

main.tf

# if we build "flask", then frontend sources also

# have to be built and copied to the "flask" image

resource "null_resource" "build-frontend" {

count = var.name == "webapp-flask" ? 1 : 0

triggers = {

always_run = "${timestamp()}"

}

provisioner "local-exec" {

command = "./bin/build-frontend.sh"

}

}

# build and push the image

resource "null_resource" "build-image" {

depends_on = [null_resource.build-frontend]

triggers = {

always_run = "${timestamp()}"

}

provisioner "local-exec" {

command = "./bin/build-image.sh ${var.dockerfile} myhandicappedpet/${var.name}"

}

}

variables.tf

variable "name" {

type = string

description = "Name of the docker image"

}

variable "dockerfile" {

type = string

description = "Path and name of the Dockerfile"

}

An additional module for checking out from git. It is located in modules/git-checkout and consists of the following files.

main.tf

resource "null_resource" "checkout" {

triggers = {

always_run = "${timestamp()}"

}

provisioner "local-exec" {

command = "./bin/git-clone.sh ${var.repo}"

}

}

variables.tf

variable "repo" {

description = "GitHub repo name"

type = string

}

Another module creates a user and a role in AWS. Initially it was needed to pull images from ECR (AWS's own registry), but since I moved to Docker Hub, and since it does not work anyway, it's probably not needed anymore; let's keep it for the future. It is located in modules/aws-iam-role and consists of the following files.

main.tf

#here is the pot with shit

data "aws_iam_policy_document" "assume-role" {

version = "2012-10-17"

statement {

sid = ""

effect = "Allow"

principals {

type = "Service"

identifiers = ["${var.service}.amazonaws.com"]

}

actions = ["sts:AssumeRole"]

}

}

resource "aws_iam_role" "role" {

name = "ServiceRole-${var.name}"

assume_role_policy = data.aws_iam_policy_document.assume-role.json

}

data "aws_iam_policy_document" "policy" {

count = var.action_list != null ? 1 : 0

version = "2012-10-17"

statement {

sid = ""

effect = "Allow"

actions = var.action_list

resources = ["*"]

}

}

resource "aws_iam_role_policy" "policy" {

count = var.action_list != null ? 1 : 0

name = "${var.name}"

role = aws_iam_role.role.id

policy = data.aws_iam_policy_document.policy[0].json

}

resource "aws_iam_role_policy_attachment" "policy-attachment" {

count = var.policy != null ? 1 : 0

policy_arn = "arn:aws:iam::aws:policy/service-role/${var.policy}"

role = aws_iam_role.role.name

}

output "id" {

value = aws_iam_role.role.id

}

output "arn" {

value = aws_iam_role.role.arn

}

output "name" {

value = aws_iam_role.role.name

}

variables.tf

variable "service" {

description = "One of Amazon's three-letter service, e.g. 'ec2'"

type = string

default = "ec2"

}

variable "policy" {

description = "One of the AWS built-in policies #see https://us-east-1.console.aws.amazon.com/iam/home?region=us-west-2#/policies"

type = string

default = null

}

variable "action_list" {

description = "List of actions... somewhat hardcoded in Amazon... good thing is that action name (part after the colon) can be wildcarded"

type = list(string)

default = null

}

variable "name" {

description = "Name of the role, normally name of the project etc."

type = string

}

Besides that, some shell scripts.

bin/git-clone.sh

#!/bin/bash

# Tracing (set -x) is deliberately left off here:
# it would print the access token into the logs.

if [ -z "$GITHUB_ACCESS_TOKEN" ]; then
    echo "GITHUB_ACCESS_TOKEN must be set" >&2
    exit 1
fi

token=$GITHUB_ACCESS_TOKEN
actor=${GITHUB_ACTOR:-GitHub}
actor_email=${GITHUB_ACTOR_EMAIL:-noreply@github.com}
repo=$1

# the directory name defaults to the last path component of the repo
IFS='/' read -ra A <<< "$repo"
reponame="${A[-1]}"
dirnam=${2:-$reponame}

# make script idempotent:
# keep using the same repo if we checked it out earlier
if [ -d "$dirnam" ]; then
    exit 0
fi

mkdir "$dirnam"

(cd "$dirnam" && \
    git clone "https://ilyaukin:$token@github.com/$repo.git" . && \
    git config --local user.name "$actor" && \
    git config --local user.email "$actor_email")
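The IFS/read trick in the script extracts the last path component of the repo name; the negative index in ${A[-1]} needs a reasonably recent bash. A more portable way to get the same result is a parameter expansion, sketched here with a hypothetical function name:

```shell
#!/bin/sh
# Hypothetical alternative to the array-based parsing:
# strip everything up to and including the last "/".
repo_dirname() {
  printf '%s\n' "${1##*/}"
}

repo_dirname ilyaukin/sadist-be   # prints sadist-be
repo_dirname sadist-proxy         # no slash: prints sadist-proxy
```

The expansion works in any POSIX shell, so the script would no longer depend on bash arrays for this step.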

bin/build-image.sh

#!/bin/bash

set -x

if [ -z $DOCKER_ACCESS_TOKEN ]; then

echo "DOCKER_ACCESS_TOKEN must be set" >&2

exit 1

fi

dockerfile=$1

path=$(dirname $dockerfile)

image=$2

tag=latest

docker build -f $dockerfile -t $image $path

echo $DOCKER_ACCESS_TOKEN | docker login -u myhandicappedpet --password-stdin

docker tag $image $image:$tag

docker push $image:$tag

bin/deploy.sh

#!/bin/bash

set -x

#parameters

host=$1

key=aws_my_handicapped_pet

#set up agent if we aren't already set

if [ -z $SSH_AUTH_SOCK ]; then

export SSH_AUTH_SOCK=/tmp/ssh-agent.sock

ssh-agent -a $SSH_AUTH_SOCK > /dev/null

fi

#make the target machine trusted

mkdir -p ~/.ssh

ssh-keygen -F $host

if [ $? != 0 ]; then

ssh-keyscan $host >> ~/.ssh/known_hosts

fi

#copy and add ssh key

cp $key ~/.ssh/

cp $key.pub ~/.ssh/

chmod 600 ~/.ssh/$key

ssh-add ~/.ssh/$key

#test connection

ssh -l ec2-user $host docker system dial-stdio

docker run -i -e DATABASE_URL myhandicappedpet/webapp-flask python -m scripts.apply_migrations

export COMPOSE_PROJECT_NAME=$ENV

docker compose -f docker-compose.yml -f docker-compose."$ENV".yml pull

docker compose -f docker-compose.yml -f docker-compose."$ENV".yml up -d --force-recreate
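Note that deploy.sh never wraps the docker commands in an explicit ssh call: the local-exec block in main.tf sets DOCKER_HOST to an ssh:// URL, so the local docker CLI talks to the daemon on the instance. The sketch below shows, with hypothetical helper names and a made-up host name, how that value and the compose file arguments are put together; it only prints strings and does not contact any host.

```shell
#!/bin/sh
# Hypothetical helpers; they only build strings.

# Value that main.tf passes as DOCKER_HOST for a given host name.
docker_host_for() {
  printf 'ssh://ec2-user@%s\n' "$1"
}

# Compose file arguments for an environment (prod or staging,
# as in the description of the env variable).
compose_args_for() {
  case $1 in
    prod|staging)
      printf '%s\n' "-f docker-compose.yml -f docker-compose.$1.yml" ;;
    *)
      echo "unknown environment: $1" >&2
      return 1 ;;
  esac
}

# The host name below is a placeholder, not a real instance.
docker_host_for ec2-203-0-113-10.us-west-2.compute.amazonaws.com
compose_args_for prod
```

Rejecting unknown environments early is a design choice of this sketch; the original script simply interpolates $ENV into the file name.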

Moralité

Nowadays everyone tends to write conclusions, even ChatGPT puts them after each response... So I won't break with the fashion and will write mine.

The first thing that I took away from the adventures described in this post (so far the longest in this blog) is not to implement more sophisticated solutions than I really need. A minimal working solution is best, and even that can turn out to be more complex than it initially looks.

Second, AWS infra is overcomplicated. It keeps growing exponentially (I saw somewhere a graph of the number of their services over time), and their boasted "better integration with other Amazon services" does not always work as expected. I don't know the statistics, but probably for most customers AWS equals EC2. At least, for me it is the only one of their services that worked without stunts. I wonder, however, what share of all possible three-letter acronyms they have already used for their services' names.